Anatomy of a Website - How it Works and Why it Might Not

A Little Background

Today we are going to focus on how a website works, share an overview of a hosting environment, and explore some other aspects of how a website functions.

Back in the old days, a website was a simple text document stored among the server’s files. HTML let webmasters define a page’s formatting with flexibility comparable to an advanced text editor such as MS Word, and allowed them to display images and, eventually, other media.

The most crucial resource a website relied upon was bandwidth - the computer’s processing power was not nearly as relevant. All the server had to do to provide you with the website’s content was fetch the files and send them to your browser. HTML did have one major flaw, however: the websites were static, which meant that only site owners were able to modify site content.

A major evolution, later called Web 2.0, moved beyond static HTML pages that didn’t allow visitor input (comments, posts) and introduced a concept where you can leave your feedback for others to read and reply to. That concept relied heavily on dynamic pages. As a result, many developers and webmasters switched to server-side languages such as PHP, which brought a lot of features to the table but also put sudden pressure on the industry to focus on the server’s processing power - many websites turned from a light document into a resource-hungry application overnight. With the advent of Web 2.0, servers with slow CPUs that had been more than enough for multiple static HTML websites with heavy traffic were doomed, because just one site switching to PHP could bring the whole server down.
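To make the difference concrete, here is a minimal sketch in Python (used here purely for illustration; the sites described above typically ran PHP). The function names `serve_static` and `serve_dynamic` are hypothetical. The static version hands back a stored document unchanged, while the dynamic version has to build the page from visitor comments on every request, which is where the extra CPU work comes from.

```python
import html

# A static page: the server only has to return this stored text unchanged.
STATIC_PAGE = "<html><body><h1>Welcome</h1></body></html>"


def serve_static() -> str:
    """Return the stored document as-is; almost no processing is involved."""
    return STATIC_PAGE


def serve_dynamic(comments: list[str]) -> str:
    """Build the page on every request from visitor-supplied comments."""
    # Escape each comment so visitor input cannot inject markup into the page.
    items = "".join(f"<li>{html.escape(c)}</li>" for c in comments)
    return f"<html><body><h1>Welcome</h1><ul>{items}</ul></body></html>"
```

Calling `serve_dynamic(["Nice & fast"])` does real work for each visitor (escaping, joining, formatting), and a real application would also query a database at this point.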

A website’s general structure went through some changes as well. The age of Content Management Systems (CMS), applications built from numerous modules, had arrived. Content could easily be added and taken away, and the same was true of functionality, thanks to the extensions, plugins and modules supported by modern CMS such as Joomla! and WordPress. These changes can be compared to operating systems. DOS in the 1980s consisted of just a handful of files, just like many simple websites in the early 1990s required only one index.html file with the code and maybe a few images in separate files. Today we use Windows 7/8/10, which consists of tens of thousands of files, much like a modern Joomla!/WordPress website.

Another change is the way websites store and provide data. With a lot of information to record, databases were introduced. They allowed developers to keep the “application” (stored in files) separate from the user data (articles, comments, registered users’ information), which was kept in the database for simpler management. And yet again, it put some pressure on the servers, but this time it was in terms of storage. Platter hard drives were reliable, but their speed, especially where millions of small files are accessed very often, made websites load slowly under high traffic. Demand for high-speed storage resulted in a wide variety of RAID arrays (multiple hard drives acting as one storage container), and eventually the adoption of SSD technology.
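As a rough illustration of that separation, the sketch below keeps the "application" as Python code and the user data in a database. It assumes Python's built-in `sqlite3` module with an in-memory database; the `comments` table and the `load_comments` helper are hypothetical names chosen for this example, not part of any particular CMS.

```python
import sqlite3

# The user data lives in the database, separate from the application code.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE comments (id INTEGER PRIMARY KEY, author TEXT, body TEXT)"
)
conn.execute(
    "INSERT INTO comments (author, body) VALUES (?, ?)",
    ("alice", "Great article!"),
)
conn.commit()


def load_comments(connection: sqlite3.Connection) -> list[tuple[str, str]]:
    """The 'application' part: fetch stored comments in the order they were added."""
    rows = connection.execute("SELECT author, body FROM comments ORDER BY id")
    return rows.fetchall()
```

The code can be updated or replaced without touching the stored comments, and every page view that shows comments turns into a read from storage, which is why disk speed started to matter.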

In the meantime, to reduce the load on the servers, multiple caching technologies were invented. These made the server generate the site’s code once and keep it in the server’s RAM. This way it only had to fetch the “dynamic” part of the website, usually from the database located on a hard drive. That helped with the processing power, but did not address memory usage on servers. Switching to PHP didn’t help either - just like on desktop computers, RAM was already being used extensively to hold the application’s data: for every request the application had to pull information from storage, process it (using memory while it did so), generate the page’s code, and then free up the RAM it had used. Today, servers using 100+ gigabytes of RAM are not an uncommon sight.

Hosting companies had to adapt very quickly. A typical server hosted many websites, and if there were any limits at all, it was usually the bandwidth that got throttled. The rule of thumb was that more traffic simply required more bandwidth, and no one looked at other resources. That was both good and bad - if a website required a lot of CPU or RAM to generate a page, it just took it, so webmasters didn’t have to worry about their site working slowly until they got an email from an angry sysadmin. As time went by, average CPU and RAM usage increased to the point where there was not much left - a traffic spike on one website (a commercial on TV or an event where the site’s address is advertised) would bring the whole server to a halt because it was under heavy strain from the data going back and forth. And, obviously, that meant another email from an angry sysadmin. Limits were introduced one after another to protect a server from being taken down by a single website and to ensure that all sites get their fair share of resources.
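A toy model shows what such a limit does. It assumes CPU demand is expressed as a percentage of the whole server and that every site gets the same cap; the site names, the numbers, and the `allocate_cpu` function are made up for illustration, and real hosting platforms enforce limits at the operating-system level rather than in application code.

```python
def allocate_cpu(demand: dict[str, float], per_site_limit: float) -> dict[str, float]:
    """Grant each site what it asks for, but never more than the per-site cap."""
    return {site: min(wanted, per_site_limit) for site, wanted in demand.items()}


# Hypothetical demand during a traffic spike on one site (percent of server CPU).
demand = {"shop.example": 90.0, "blog.example": 10.0, "forum.example": 15.0}

# With a 40% cap, the busy site slows down but the others still get their share.
granted = allocate_cpu(demand, per_site_limit=40.0)
```

Without the cap, the three sites would ask for 115% of the server and everything would grind to a halt; with it, the total stays within what the machine can deliver.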

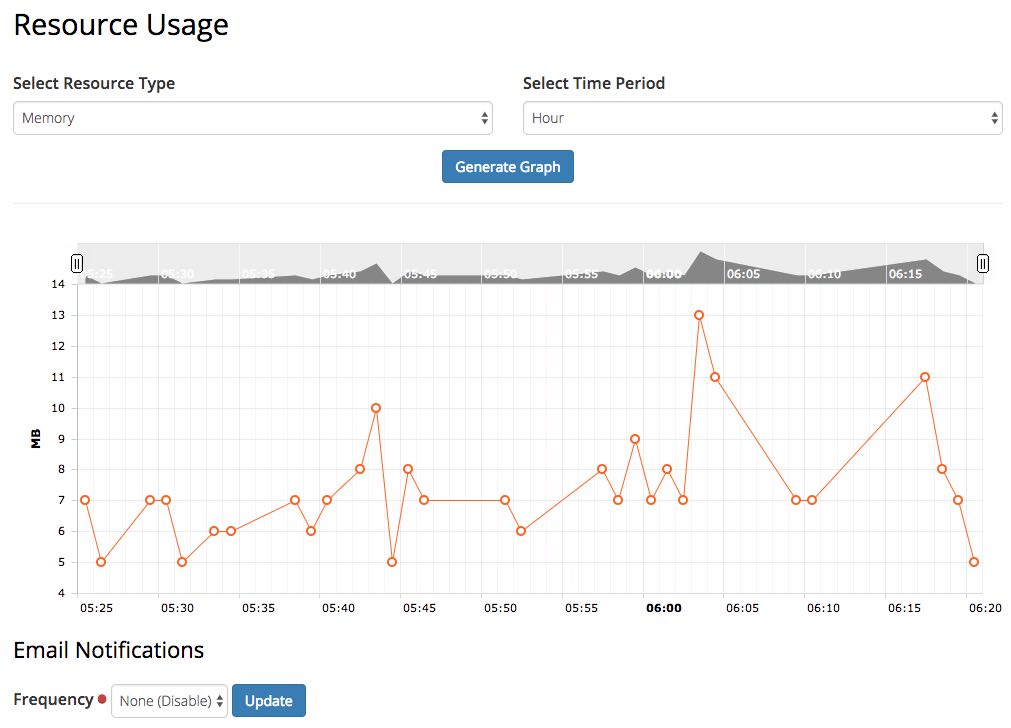

Today, with a hosting instance, you get a specific amount of processing power. It’s good to know how much of it is being “spent” and to monitor it once in a while. If you have a website with us, you can easily monitor resources and get graphs for the last hour, day, week, or month - it helps a lot to see whether a marketing campaign causes spikes in CPU or RAM usage.

If you see any indications of your site exhausting most (or all) available resources, you can always add more by purchasing a Compute Booster for your site. This will allow the site to handle traffic spikes, such as marketing campaigns or social media posts that unexpectedly went viral - with just a few clicks in your CCP you can ensure that visitors will get the information they are looking for.